blog

Some useful thoughts

Tag Extraction Project with spaCy-LLM


July 30, 2024

Introduction

This tutorial guides you through building a tag extraction system with spaCy and its large language model (LLM) integration, spacy-llm. The project extracts relevant tags from text in multiple languages, using an LLM to generate accurate, context-aware tags.

Project Overview

The tag extraction project consists of two main components:

- spacy_main.py: The main module that handles configuration loading and tag extraction.

- tag_extractor.py: A module containing the core logic for tag extraction using LLM.

The project also includes configuration files, templates, and example data for different languages.

Prerequisites

Before starting, ensure you have the following installed:

- Python 3.7+

- spaCy

- spaCy-LLM

- PyYAML

- python-dotenv

- Jinja2

You can install these dependencies using pip:

pip install spacy spacy-llm pyyaml python-dotenv jinja2

Project Structure

The project is organized as follows:

.

├── spacy_main.py

├── tag_extractor.py

├── configs/

│ └── config_en.cfg

├── templates/

│ └── tag_extractor_template_en.jinja

├── example/

│ └── en_tags_few_shot.yaml

└── .env

Setting Up the Environment

- Create a .env file in the project root directory to store your API keys and other sensitive information.

- Add your OpenAI API key to the .env file:

OPENAI_API_KEY=your_api_key_here

- Ensure that the project root is in your Python path. You can do this by adding the following lines to your script or by setting the PYTHONPATH environment variable:

import sys
import os

sys.path.append(os.path.dirname(os.path.abspath(__file__)))

Configuration Files

The project uses configuration files to set up the spaCy pipeline and LLM integration. Let's look at the config_en.cfg file for English:

[paths]

tag_extractor_template = "./templates/tag_extractor_template_en.jinja"

examples_path = "./example/en_tags_few_shot.yaml"

[nlp]

lang = "en"

pipeline = ["llm_tags"]

[components]

[components.llm_tags]

factory = "llm"

[components.llm_tags.task]

@llm_tasks = "spacy-tag-extractor.TagExtractor.v1"

n_tags = 10

template = ${paths.tag_extractor_template}

examples_path = ${paths.examples_path}

[components.llm_tags.model]

@llm_models = "spacy.GPT-3-5.v3"

name = "gpt-3.5-turbo"

config = {"temperature": 0.001}

[components.llm_tags.cache]

@llm_misc = "spacy.BatchCache.v1"

path = "cache/en"

batch_size = 64

This configuration file sets up the spaCy pipeline with the LLM tag extractor component, specifies the paths for the template and examples, and configures the GPT-3.5-turbo model for tag extraction.

Tag Extraction Template

The project uses a Jinja2 template to generate prompts for the LLM. Here's an example of the template content:

# IDENTITY and PURPOSE

You are an AI assistant specialized in extracting relevant and concise tags from news articles. Your primary role is to analyze the given text and identify the most significant keywords or phrases that encapsulate the main topics, themes, or key elements of the article. You are adept at distilling complex information into a set number of precise, meaningful tags.

Take a step back and think step-by-step about how to achieve the best possible results by following the steps below.

# STEPS

- Read and comprehend the entire news article carefully.

- Identify the main topics, themes, and key elements of the article.

- Create a list of potential tags based on the most important and relevant information.

- Refine the list to select exactly the number of tags specified in the prompt.

- Ensure the selected tags are concise and accurately represent the article's content.

- Format the tags as requested in the output instructions.

# OUTPUT INSTRUCTIONS

- Only output Markdown.

- Provide exactly {{ n_tags }} tags.

- The tags should be relevant to the news article and concise.

- Present the tags separated by commas, without any additional text or formatting.

- Do not number the tags or add any prefixes.

- Do not include any explanations or additional information beyond the tags themselves.

- Ensure you follow ALL these instructions when creating your output.

{% if examples %}

Examples:

{% for example in examples %}

Text: {{ example.text }}

Tags: {{ example.tags | tojson }}

{% endfor %}

{% endif %}

Text to tag:

{{ text }}

Tags:

This template provides clear instructions to the LLM on how to extract tags from the given text.

Core Components

ConfigLoader

The ConfigLoader class in spacy_main.py handles loading configuration files and associated resources for different languages:

from pathlib import Path
from typing import Dict, Optional


class ConfigLoader:
    def __init__(self, base_dir: str = "."):
        self.base_dir = Path(base_dir)
        self.config_dir = self.base_dir / "configs"
        self.example_dir = self.base_dir / "example"
        self.template_dir = self.base_dir / "templates"

    def get_paths(self, lang: str) -> Dict[str, Optional[str]]:
        # File names follow a per-language naming convention.
        config_path = self.config_dir / f"config_{lang}.cfg"
        example_path = self.example_dir / f"{lang}_tags_few_shot.yaml"
        template_path = self.template_dir / f"tag_extractor_template_{lang}.jinja"

        # The config file is mandatory; examples and template are optional.
        if not config_path.exists():
            raise FileNotFoundError(f"Configuration file for language '{lang}' not found.")

        return {
            "config": str(config_path),
            "example": str(example_path) if example_path.exists() else None,
            "template": str(template_path) if template_path.exists() else None,
        }

TagExtractor

The TagExtractor class in spacy_main.py is responsible for loading the spaCy model and extracting tags from text:

from typing import List

import spacy


class TagExtractor:
    def __init__(self, config_loader: ConfigLoader):
        self.config_loader = config_loader
        self.nlp_models = {}  # loaded pipelines, keyed by language code

    def _load_model(self, lang: str) -> spacy.language.Language:
        if lang not in self.nlp_models:
            paths = self.config_loader.get_paths(lang)
            # load_config reads the file and closes it again
            config = spacy.util.load_config(paths["config"])
            # Override the config's paths when language-specific files exist
            if paths["example"]:
                config["components"]["llm_tags"]["task"]["examples_path"] = paths["example"]
            if paths["template"]:
                config["components"]["llm_tags"]["task"]["template"] = paths["template"]
            self.nlp_models[lang] = spacy.util.load_model_from_config(config, auto_fill=True)
        return self.nlp_models[lang]

    def extract_tags(self, text: str, lang: str, n_tags: int) -> List[str]:
        nlp = self._load_model(lang)
        # Register the custom Doc attribute once
        if not spacy.tokens.Doc.has_extension("article_tags"):
            spacy.tokens.Doc.set_extension("article_tags", default=None)

        llm_component = nlp.get_pipe("llm_tags")
        llm_component.task.n_tags = n_tags

        doc = nlp(text)
        tags = doc._.get("article_tags")
        if tags is None:
            raise ValueError("Tag extraction failed. No tags were generated.")
        return tags

TagExtractorTask

The TagExtractorTask class in tag_extractor.py implements the core logic for tag extraction:

from typing import Any, Dict, Iterable, List, Optional

from spacy.tokens import Doc


# TemplateLoader, ResponseParser, and _load_template are not shown in this excerpt.
class TagExtractorTask:
    def __init__(self, n_tags: int, template_loader: TemplateLoader,
                 response_parser: ResponseParser, template_path: Optional[str] = None,
                 examples: Optional[List[Dict[str, Any]]] = None):
        self.n_tags = n_tags
        self.template_loader = template_loader
        self.response_parser = response_parser
        self.template_path = template_path
        self.template = self._load_template()
        self.examples = examples

    def generate_prompts(self, docs: Iterable[Doc]) -> Iterable[str]:
        # One rendered prompt per document
        for doc in docs:
            yield self.template.render(text=doc.text, n_tags=self.n_tags, examples=self.examples)

    def parse_responses(self, docs: Iterable[Doc], responses: Iterable[str]) -> Iterable[Doc]:
        for doc, response in zip(docs, responses):
            try:
                tags = self.response_parser.parse(response, self.n_tags)
                doc._.set("article_tags", tags)
            except ValueError as e:
                # Fall back to an empty tag list when the response cannot be parsed
                print(f"Error parsing response for doc {doc.text[:50]}...: {e}")
                doc._.set("article_tags", [])
            yield doc

Usage

To use the tag extraction system, you can create a script that initializes the environment, creates a TagExtractor instance, and calls the extract_tags method:

from spacy_main import initialize_environment, create_tag_extractor


def main():
    initialize_environment()
    extractor = create_tag_extractor()

    text = "Your input text here..."
    lang = "en"
    n_tags = 10

    try:
        tags = extractor.extract_tags(text, lang, n_tags)
        print(f"Extracted tags: {tags}")
    except Exception as e:
        print(f"Error extracting tags: {e}")


if __name__ == "__main__":
    main()

Customization

You can customize the tag extraction process by modifying the configuration files, templates, and examples for each language. This allows you to adapt the system to different domains or specific requirements.
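When adding a language, it can help to check that all three per-language files are in place. The sketch below is a hypothetical helper (not part of the project) that assumes the same naming convention ConfigLoader uses and reports which files are missing:

```python
import tempfile
from pathlib import Path
from typing import List


def missing_resources(base_dir: str, lang: str) -> List[str]:
    """List the per-language files that are expected but absent."""
    base = Path(base_dir)
    expected = [
        base / "configs" / f"config_{lang}.cfg",
        base / "example" / f"{lang}_tags_few_shot.yaml",
        base / "templates" / f"tag_extractor_template_{lang}.jinja",
    ]
    return [p.relative_to(base).as_posix() for p in expected if not p.exists()]


# Demo in a throwaway directory: only the config for "de" exists.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "configs").mkdir()
    (Path(tmp) / "configs" / "config_de.cfg").write_text('[nlp]\nlang = "de"\n')
    demo_missing = missing_resources(tmp, "de")

print(demo_missing)
```

In this demo the few-shot examples and the template for "de" are reported as missing. ConfigLoader treats both as optional, so the pipeline would fall back to whatever paths the config file itself specifies.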

Troubleshooting

- API Key Issues: Ensure that your OpenAI API key is correctly set in the .env file and that the file is in the project root directory.

- Import Errors: If you encounter import errors, make sure that the project root is in your Python path.

- File Not Found Errors: Double-check that all configuration files, templates, and examples are in the correct directories as specified in the ConfigLoader class.

- LLM Response Parsing Errors: If you encounter errors parsing the LLM response, review the JSONResponseParser class in tag_extractor.py and ensure that the LLM is generating responses in the expected format.
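The JSONResponseParser class is not shown in this post, so the function below is only a hypothetical stand-in that illustrates one tolerant way to parse a reply. It assumes the model returns either a JSON list or a comma-separated string, and it raises ValueError (the exception parse_responses catches) when nothing usable is found:

```python
import json
from typing import List


def parse_tags(response: str, n_tags: int) -> List[str]:
    """Parse an LLM reply into at most n_tags cleaned, de-duplicated tags."""
    text = response.strip()
    if text.startswith("["):
        # Looks like a JSON list, e.g. ["ai", "nlp"]
        try:
            raw_tags = [str(item) for item in json.loads(text)]
        except json.JSONDecodeError as exc:
            raise ValueError(f"Invalid JSON in response: {exc}") from exc
    else:
        # Fall back to a comma-separated string, e.g. ai, nlp
        raw_tags = text.split(",")

    cleaned: List[str] = []
    seen = set()
    for tag in raw_tags:
        tag = tag.strip().strip("\"'")
        key = tag.lower()
        if tag and key not in seen:  # drop empties and case-insensitive duplicates
            seen.add(key)
            cleaned.append(tag)

    if not cleaned:
        raise ValueError("No tags found in the response.")
    return cleaned[:n_tags]


print(parse_tags('["AI", "NLP", "ai"]', 10))
print(parse_tags("spaCy, LLM , tagging", 2))
```

Logging the raw response alongside the ValueError usually makes it clear whether the prompt or the parser needs adjusting.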

Conclusion

This tutorial has guided you through the process of setting up and using a tag extraction system with spaCy and LLM integration. The project demonstrates how to leverage large language models for accurate and context-aware tag generation, with support for multiple languages.

Future enhancements could include:

- Adding support for more languages

- Implementing additional LLM models

- Creating a web interface for easy tag extraction

- Optimizing performance for large-scale processing

- Implementing more advanced caching mechanisms

By understanding and building upon this project, you can create powerful natural language processing applications that benefit from the latest advancements in language models.

Integrating Large Language Models with spaCy for Enhanced URL Topic Classification


December 18, 2023

This guide provides a detailed walkthrough on integrating Large Language Models (LLMs) with spaCy through spacy-llm, with a focus on classifying URLs into topics. The integration has three parts: a config.cfg file that defines the pipeline, a Python script that classifies a URL, and a training.yml file that supplies few-shot examples.

Configuration (`config.cfg`):

To enable text classification into categories such as Education, Entertainment, and Technology, the configuration file is critical. It outlines the structure of the pipeline, including the model and task specifications, alongside the desired labels for classification:

[nlp]

lang = "en"

pipeline = ["llm"]

[components]

[components.llm]

factory = "llm"

[components.llm.task]

@llm_tasks = "spacy.TextCat.v3"

exclusive_classes = true

labels = ["Education", "Entertainment", "Technology"]

label_definitions = {
    "Education": "Websites offering educational materials, online courses, and scholarly resources.",
    "Entertainment": "Portals related to movies, music, gaming, and cultural festivities.",
    "Technology": "Sites that cover the latest in tech, product reviews, and how-to guides."
    }

[components.llm.model]

@llm_models = "spacy.GPT-3-5.v1"

# Alternative: gpt-4 or other models
name = "gpt-3.5-turbo-0613"

config = {"temperature": 0.001}

[components.llm.task.examples]

@misc = "spacy.FewShotReader.v1"

path = "training.yml"

Main Script (`main.py`):

The Python script is engineered to classify URLs into topics using the configured spaCy model. It initiates by setting up environment variables and parsing command-line arguments for the URL and the configuration path. The script then proceeds to classify the given URL:

import argparse
import os
import sys

from dotenv import load_dotenv
from spacy_llm.util import assemble

# Load environment variables (such as the API key) from .env
load_dotenv()


def setup_args():
    parser = argparse.ArgumentParser(description='URL topic recognition')
    parser.add_argument('--url', '-u', type=str, required=True, help='URL to be classified')
    parser.add_argument('--spacy-config-path', '-c', type=str, default='config.cfg', help='Path to the spaCy config file')
    return parser.parse_args()


def topic_recognition(url, spacy_config_path='config.cfg'):
    try:
        if not os.path.exists(spacy_config_path):
            raise FileNotFoundError(f"The spaCy config file at {spacy_config_path} was not found.")

        nlp = assemble(spacy_config_path)
        doc = nlp(url)

        if not doc.cats:
            raise ValueError("No categories detected. Check model configuration.")

        # Pick the label with the highest score
        max_category = max(doc.cats, key=doc.cats.get)
        return max_category
    except Exception as e:
        print(f"Error in topic recognition: {e}", file=sys.stderr)
        return None


def main():
    args = setup_args()
    category = topic_recognition(args.url, args.spacy_config_path)
    if category:
        print(f"Predicted Category: {category}")
    else:
        print("URL classification failed. Refer to error messages.")


if __name__ == "__main__":
    main()

Training File (`training.yml`):

The few-shot approach relies on training.yml, a file containing example URLs with their associated categories. These examples are included in the prompt sent to the LLM rather than used to update model weights, and they help steer the model toward the intended labels:

- text: https://example.edu/online-courses/
  answer: Education

- text: https://example.com/latest-movie-reviews/
  answer: Entertainment

- text: https://techblog.example.com/new-smartphone-launch/
  answer: Technology

- text: https://example.com/concerts-2024/
  answer: Entertainment

- text: https://example.edu/science-for-kids/
  answer: Education

- text: https://techguide.example.com/choosing-the-right-laptop/
  answer: Technology
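Because a mistyped label in the examples file can quietly mislead the model, a quick sanity check can be useful. The sketch below is a hypothetical helper: it assumes the examples have already been loaded into a list of dictionaries (for instance with a YAML parser) and verifies that every answer is one of the configured labels:

```python
from collections import Counter
from typing import Dict, List

LABELS = ["Education", "Entertainment", "Technology"]

# The same examples as in training.yml, written out as Python data.
EXAMPLES = [
    {"text": "https://example.edu/online-courses/", "answer": "Education"},
    {"text": "https://example.com/latest-movie-reviews/", "answer": "Entertainment"},
    {"text": "https://techblog.example.com/new-smartphone-launch/", "answer": "Technology"},
    {"text": "https://example.com/concerts-2024/", "answer": "Entertainment"},
    {"text": "https://example.edu/science-for-kids/", "answer": "Education"},
    {"text": "https://techguide.example.com/choosing-the-right-laptop/", "answer": "Technology"},
]


def check_examples(examples: List[Dict[str, str]], labels: List[str]) -> Counter:
    """Validate few-shot examples and return how many examples each label has."""
    counts = Counter({label: 0 for label in labels})
    for i, example in enumerate(examples):
        if not example.get("text"):
            raise ValueError(f"Example {i} has no text.")
        if example.get("answer") not in labels:
            raise ValueError(f"Example {i} has unknown label: {example.get('answer')!r}")
        counts[example["answer"]] += 1
    return counts


label_counts = check_examples(EXAMPLES, LABELS)
print(dict(label_counts))
```

The returned counts also show whether any label is under-represented among the examples.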

Usage Example:

To classify a URL, execute the following command:

python main.py --url https://example.com/latest-movie-reviews/ --spacy-config-path config.cfg

Expected output:

Predicted Category: Entertainment

For more detailed information and updates, visit the official GitHub repository at https://github.com/explosion/spacy-llm.

Leveraging LLMs for Efficient Web Content Analysis: The Markdown Advantage


January 5, 2023

In the rapidly evolving field of natural language processing (NLP), Large Language Models (LLMs) stand out for their ability to understand and generate human-like text. However, when tasked with processing web content, LLMs often encounter a significant hurdle: the token constraint. Each piece of data processed by an LLM is broken down into tokens, and due to architectural limits, there's a maximum number of tokens these models can handle at once. Web pages, rich in HTML structure and content, can quickly exceed this limit, forcing the input to be truncated or split into chunks, which slows processing and reduces efficiency.

This is where the ingenious solution of transforming HTML content into Markdown (MD) proves invaluable. Markdown, a lightweight markup language with plain-text formatting syntax, offers a simpler, more concise representation of web content. By converting HTML to Markdown, we significantly reduce the number of tokens the LLM needs to process. This not only accelerates the analysis but also makes it more cost-effective, a crucial factor for large-scale NLP projects.

One tool that brilliantly facilitates this conversion process is the markdownify library. This Python library effortlessly transforms HTML into Markdown, retaining the essential elements of the original content while stripping away the excess HTML baggage. By leveraging markdownify, developers and data scientists can prepare web content in a format that's more palatable for LLMs, enabling faster and more accurate content analysis and scraping.
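To make the size difference concrete, here is a minimal sketch that uses only Python's standard library. It is not markdownify and handles just a handful of tags (headings, paragraphs, links, list items); the class name, function name, and sample page are made up for illustration. Character counts are used as a rough proxy, since real token counts depend on the model's tokenizer:

```python
import re
from html.parser import HTMLParser


class TinyMarkdownConverter(HTMLParser):
    """Convert a very small subset of HTML (h1-h3, p, a, li) to Markdown."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self.skip_depth = 0
        self.href = None

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self.skip_depth += 1
        elif tag in ("h1", "h2", "h3"):
            self.parts.append("\n" + "#" * int(tag[1]) + " ")
        elif tag == "p":
            self.parts.append("\n")
        elif tag == "li":
            self.parts.append("\n- ")
        elif tag == "a":
            self.href = dict(attrs).get("href")
            self.parts.append("[")

    def handle_endtag(self, tag):
        if tag in self.SKIPPED:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif tag == "a":
            self.parts.append(f"]({self.href or ''})")
            self.href = None
        elif tag in ("h1", "h2", "h3", "p"):
            self.parts.append("\n")

    def handle_data(self, data):
        # Ignore script/style bodies and whitespace-only runs between tags.
        text = re.sub(r"\s+", " ", data)
        if self.skip_depth == 0 and text.strip():
            self.parts.append(text)


def html_to_markdown(html: str) -> str:
    converter = TinyMarkdownConverter()
    converter.feed(html)
    converter.close()
    lines = [line.strip() for line in "".join(converter.parts).splitlines()]
    return "\n".join(line for line in lines if line)


SAMPLE_HTML = """
<html><head><style>body { font-family: sans-serif; }</style></head>
<body>
  <div class="container"><h1 class="title">Release notes</h1>
  <p class="lead">Version 2 adds <a href="https://example.com/docs" target="_blank">new docs</a>.</p>
  <ul class="items"><li>Faster parsing</li><li>Smaller output</li></ul></div>
  <script>console.log("tracking");</script>
</body></html>
"""

markdown = html_to_markdown(SAMPLE_HTML)
print(markdown)
print(f"HTML: {len(SAMPLE_HTML)} characters, Markdown: {len(markdown)} characters")
```

For real pages, a maintained converter such as markdownify covers far more of HTML than this sketch does.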

The benefits of this approach are manifold:

- Enhanced ability for LLMs to analyze web content for sentiment analysis, keyword extraction, and summarization without getting bogged down by the verbose nature of HTML.

- Reduced computational load on the models, paving the way for more sustainable and environmentally friendly AI practices.

- Improved efficiency in processing large volumes of web data, making it ideal for big data analytics projects.

- Cost-effective solution for large-scale NLP projects, as fewer tokens mean lower processing costs.

- Faster processing times, allowing for real-time or near-real-time content analysis.

As we continue to push the boundaries of what's possible with Large Language Models, it's innovative solutions like the conversion of HTML to Markdown that will enable us to overcome technical challenges and unlock new potentials in the realm of NLP. The markdownify library represents a key tool in this ongoing endeavor, offering a bridge between the complex structure of the web and the streamlined needs of language models. As we refine these processes, the promise of LLMs in understanding and leveraging web content has never been brighter.

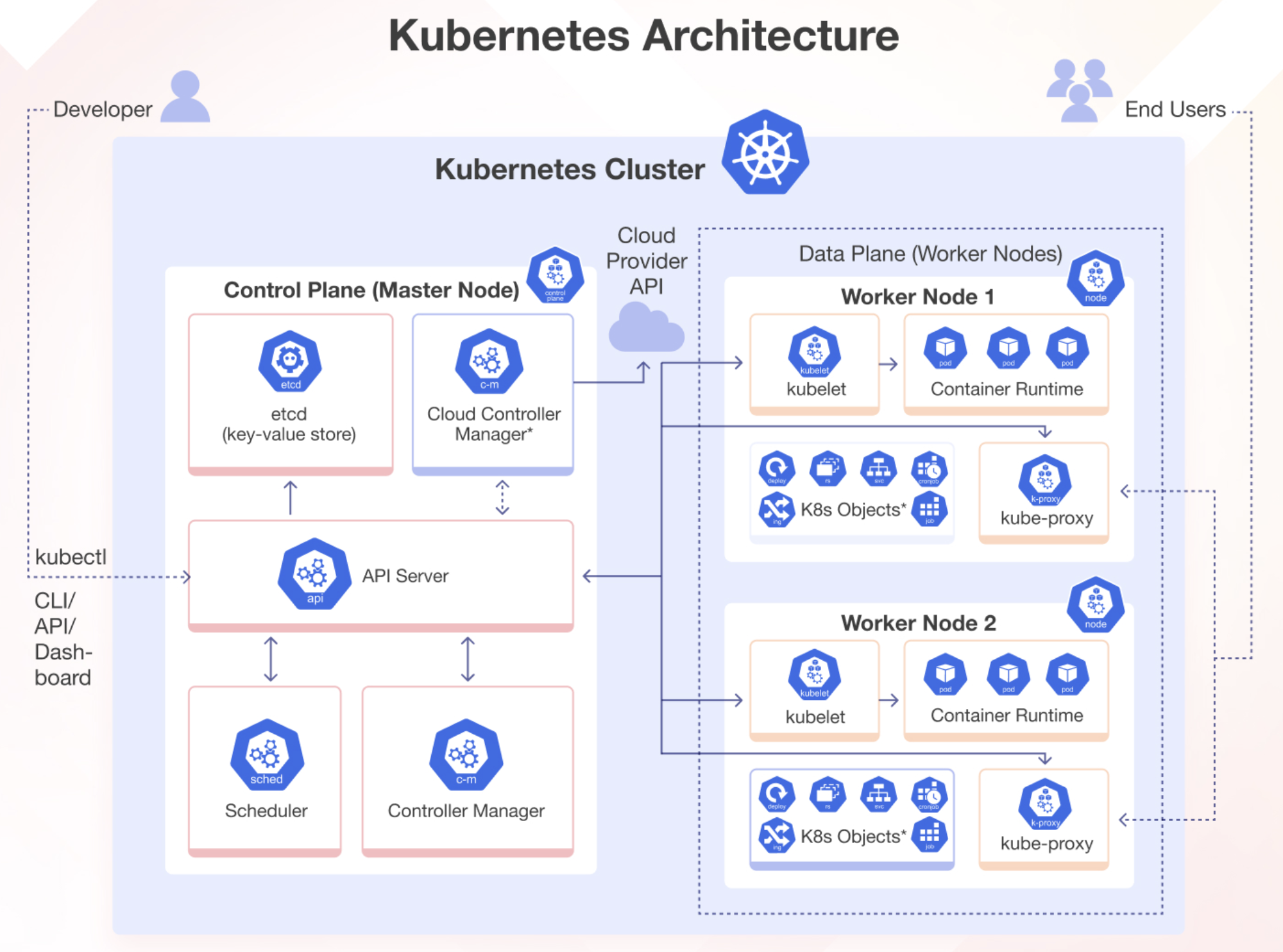

Kubernetes: Orchestrating the Future of Cloud-Native Applications


December 27, 2022

In the ever-evolving landscape of cloud computing, Kubernetes has emerged as a powerhouse, revolutionizing how we deploy, scale, and manage containerized applications. But what makes Kubernetes so pivotal in modern software infrastructure, and why should developers and organizations pay attention?

Demystifying Kubernetes: More Than Just Container Orchestration

At its core, Kubernetes (often abbreviated as K8s) is an open-source platform that automates containerized application deployment, scaling, and management. Born in Google's labs and now thriving under the Cloud Native Computing Foundation (CNCF), Kubernetes has become the de facto standard for container orchestration. However, it's much more than that – it's an ecosystem, a philosophy, and a gateway to cloud-native architecture.

The Kubernetes Advantage: Key Features That Set It Apart

1. Intelligent Workload Placement and Auto-scaling

Kubernetes goes beyond simple container orchestration. Its scheduler intelligently places containers based on resource requirements and constraints, ensuring optimal utilization of your infrastructure. Moreover, Kubernetes can automatically scale your applications based on CPU usage, memory consumption, or custom metrics, adapting to traffic spikes in real-time.
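The scaling rule documented for the Horizontal Pod Autoscaler is essentially a ratio: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The sketch below reproduces that arithmetic in plain Python. The function name and defaults are illustrative, and the real controller applies further safeguards (such as stabilization windows) that are left out here:

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Compute ceil(current_replicas * current_metric / target_metric), clamped."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))   # scale up
print(desired_replicas(4, current_metric=30, target_metric=60))   # scale down
print(desired_replicas(4, current_metric=63, target_metric=60))   # within tolerance
```

With a 60% CPU target, four pods running at 90% grow to six, four pods at 30% shrink to two, and a small deviation such as 63% leaves the count unchanged.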

2. Declarative Configuration and GitOps

One of Kubernetes' most powerful features is its declarative approach to configuration. You describe the desired state of your system, and Kubernetes works to maintain that state. This aligns perfectly with GitOps practices, where your entire infrastructure and application setup can be version-controlled, making rollbacks and audits a breeze.
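The idea of converging on a declared state can be illustrated with a toy reconciliation function. This is a conceptual sketch only, not the Kubernetes API: the dictionaries stand in for desired and observed replica counts, and the returned strings stand in for the actions a controller would take:

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], actual: Dict[str, int]) -> List[str]:
    """Return the actions needed to move the actual state to the desired state."""
    actions: List[str] = []
    for name in sorted(desired):
        want, have = desired[name], actual.get(name, 0)
        if have < want:
            actions.append(f"scale up {name}: {have} -> {want}")
        elif have > want:
            actions.append(f"scale down {name}: {have} -> {want}")
    # Anything running that is no longer declared gets removed.
    for name in sorted(set(actual) - set(desired)):
        actions.append(f"delete {name}")
    return actions


desired_state = {"web": 3, "worker": 2}
actual_state = {"web": 1, "worker": 2, "legacy-cron": 1}

for action in reconcile(desired_state, actual_state):
    print(action)
```

Because the desired state is plain data, it can live in a Git repository, and rolling back amounts to reverting to an earlier version of that data and letting the loop converge again.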

3. Service Discovery and Load Balancing

Kubernetes simplifies networking complexities by providing built-in service discovery. It assigns a single DNS name for a set of pods and can load-balance traffic between them. This abstraction allows developers to focus on application logic rather than worrying about the underlying network details.

4. Stateful Workloads and Data Persistence

While Kubernetes excels at managing stateless applications, it also provides robust support for stateful workloads. Features like StatefulSets and persistent volumes allow databases and other stateful applications to run reliably in a Kubernetes cluster, opening up new possibilities for application architecture.

Kubernetes in Action: Real-World Applications

1. Microservices Architecture

Kubernetes is the ideal platform for microservices-based applications. Its ability to manage complex deployments makes it easier to develop, deploy, and scale individual services independently. Companies like Spotify and Airbnb leverage Kubernetes to manage their vast microservices ecosystems efficiently.

2. Edge Computing and IoT

With the rise of edge computing, Kubernetes is extending its reach beyond traditional data centers. Projects like K3s and MicroK8s are bringing Kubernetes to edge devices and IoT scenarios, enabling consistent management across diverse computing environments.

3. Machine Learning Operations (MLOps)

Kubernetes is becoming increasingly important in the ML/AI space. Platforms like Kubeflow leverage Kubernetes to streamline the deployment of machine learning pipelines, making it easier to move models from development to production.

The Kubernetes Ecosystem: Extending Capabilities

1. Service Mesh with Istio

Istio, when combined with Kubernetes, provides powerful traffic management, security, and observability features for microservices. It's becoming an essential tool for managing complex service-to-service communications.

2. Serverless on Kubernetes with Knative

Knative extends Kubernetes to provide a serverless experience, allowing developers to deploy serverless workloads alongside traditional containerized applications, all managed by Kubernetes.

3. Continuous Delivery with Argo CD

Argo CD is revolutionizing GitOps practices on Kubernetes, providing declarative, version-controlled application deployment and lifecycle management.

Challenges and Considerations

While Kubernetes offers immense benefits, it's important to acknowledge its complexity. The learning curve can be steep, and proper implementation requires significant expertise. Organizations must invest in training and possibly restructure their teams to fully leverage Kubernetes' potential.

1. Security Considerations

As Kubernetes environments become more complex, security becomes paramount. Implementing proper RBAC (Role-Based Access Control), network policies, and regularly updating clusters are crucial practices.

2. Monitoring and Observability

With the dynamic nature of Kubernetes environments, traditional monitoring approaches fall short. Tools like Prometheus and Grafana have become essential for gaining insights into Kubernetes clusters and applications.

The Future of Kubernetes: Trends to Watch

1. FinOps and Cost Optimization

As Kubernetes adoption grows, organizations are focusing on optimizing costs. Tools and practices around FinOps for Kubernetes are emerging, helping teams balance performance and cost-effectiveness.

2. GitOps and Infrastructure as Code

The GitOps model is gaining traction, with more organizations treating their infrastructure as code and managing it through Git repositories, enhancing collaboration and version control.

3. AI-Driven Operations

The future may see AI playing a bigger role in Kubernetes operations, from intelligent scaling decisions to predictive maintenance of clusters.

Conclusion: Embracing the Kubernetes Revolution

Kubernetes has transcended its role as a container orchestrator to become a comprehensive platform for cloud-native applications. Its rich ecosystem, robust features, and continuous evolution make it an indispensable tool for modern software development and operations. As we move towards more distributed, scalable, and resilient systems, Kubernetes stands at the forefront, shaping the future of how we build and run applications.

Whether you're a startup looking for scalability, an enterprise aiming for digital transformation, or a developer passionate about cloud-native technologies, understanding and leveraging Kubernetes is no longer optional – it's a necessity for staying competitive in the fast-paced world of modern software development.

Are you using Kubernetes in your organization? Share your experiences or challenges in the comments below. Let's learn from each other as we navigate this exciting technology!

Dendron: Revolutionizing Note-Taking and Knowledge Management


October 20, 2022 (Updated: August 17, 2024)

In the rapidly advancing field of productivity tools, Dendron emerges as a significant innovation in note-taking and knowledge management. As an open-source, cross-platform application, Dendron transcends the functionality of typical note-taking tools, offering a robust system designed for efficient organization, structuring, and interconnection of ideas.

Distinctive Features of Dendron

Dendron uniquely blends the simplicity of plain text with the sophistication of a structured database. Below are the key features that make it particularly appealing to knowledge workers, developers, and creatives:

- Hierarchical Note Structure: Dendron utilizes a unique hierarchical system, enabling users to organize their notes in a manner akin to a well-structured file system. This method facilitates easy categorization and retrieval of information, even as the knowledge base expands.

- Advanced Search Capabilities: Dendron's lookup feature allows for the rapid retrieval of notes, ensuring that information is accessible within milliseconds, regardless of the size of the vault (collection of notes).

- Flexible Note Linking: Users can effortlessly create bidirectional links between notes, forming a personal knowledge graph that mirrors cognitive connections.

- Markdown Support: Dendron's support for Markdown enhances note formatting, making it simple to include code snippets, tables, and more within the notes.

- Version Control Integration: Seamlessly integrated with Git, Dendron supports version tracking, collaboration, and secure backups of the knowledge base.
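Dendron expresses its hierarchy through dot-delimited note names, so a note called project.api.auth sits under project.api, which sits under project. The sketch below illustrates how such names map to a tree. The note names are made up, and the code is independent of Dendron itself:

```python
from typing import Dict, List


def build_tree(note_names: List[str]) -> Dict[str, dict]:
    """Turn dot-delimited note names into a nested dictionary."""
    tree: Dict[str, dict] = {}
    for name in note_names:
        node = tree
        for part in name.split("."):
            node = node.setdefault(part, {})
    return tree


def render(tree: Dict[str, dict], indent: int = 0) -> List[str]:
    """Render the nested dictionary as indented lines, sorted alphabetically."""
    lines: List[str] = []
    for key in sorted(tree):
        lines.append("  " * indent + key)
        lines.extend(render(tree[key], indent + 1))
    return lines


notes = [
    "project.api.auth",
    "project.api.billing",
    "project.roadmap",
    "daily.journal.2024.08.17",
]

print("\n".join(render(build_tree(notes))))
```

Since the hierarchy lives in the file names, moving a note to another branch of the tree is a rename rather than a move between folders.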

Maximizing Productivity with Dendron

1. Customization for Diverse Workflows

Dendron offers flexibility, allowing users to tailor their note-taking workflow to meet specific needs. Whether documenting code, organizing academic research, or outlining creative projects, Dendron adapts to the user's workflow.

2. Integration with Development Environments

Dendron's integration with Visual Studio Code is particularly beneficial for developers, offering direct access to the entire knowledge base within the coding environment.

3. Open-Source Adaptability

As an open-source platform, Dendron invites contributions from its user community, allowing for the creation of plugins, customization, and continuous improvement. This adaptability ensures that Dendron remains a versatile tool in the knowledge management ecosystem.

4. Ensuring Knowledge Longevity

With its foundation in plain text and open-source principles, Dendron guarantees the longevity and portability of knowledge, free from proprietary constraints or vendor lock-in.

Steps to Begin Using Dendron

For those ready to explore Dendron, the following steps outline the initial process:

- Installation: Dendron can be installed as an extension for Visual Studio Code or used via the Dendron CLI for a standalone experience.

- Creating a Vault: A vault serves as a personal knowledge database. Users can create multiple vaults to separate different domains of their life or work.

- Learning the Fundamentals: Start by creating notes, utilizing the hierarchical structure, and linking notes. The official Dendron documentation provides comprehensive guidance.

- Exploring Advanced Features: As familiarity with the system grows, users can explore advanced features such as schemas, publishing, and custom snippets to enhance their note-taking capabilities.

- Engaging with the Community: The Dendron Discord community offers a platform for users to seek assistance, share insights, and connect with others focused on knowledge management.

Conclusion: The Potential of Dendron in Knowledge Management

Dendron presents a compelling solution for those seeking to overcome the limitations of traditional note-taking applications. Its innovative approach, combining hierarchical organization with advanced search and linking functionalities, positions Dendron as an essential tool for effective personal knowledge management.

This article was prepared for professionals and knowledge workers interested in advanced note-taking and knowledge management systems.

Enhancing Classification Performance with Synthetic Data Generation: A Comprehensive Study


June 2022

Project Overview

In the ever-evolving field of machine learning, the quality and quantity of training data play a crucial role in model performance. This project delves into the potential of synthetic data generation to improve the accuracy and robustness of machine learning classifiers. By conducting a comparative analysis of multiple data augmentation techniques, we aim to provide researchers and practitioners with valuable insights to make informed decisions about which method best suits their specific classification tasks.

Key Objectives

- Evaluate the effectiveness of various synthetic data generation methods

- Compare the impact of different augmentation techniques on classifier performance

- Provide actionable insights for researchers to optimize their data augmentation strategies

Datasets Utilized

To ensure a comprehensive evaluation, we employed three distinct datasets, each comprising binary classification tasks with varying sample sizes:

- PC dataset: A curated corpus for sentiment analysis of electronic devices such as laptops, cameras, and cell phones. It consists of customer-written reviews, each labeled as expressing "positive" or "negative" sentiment toward the product.

- CR dataset: Short for Customer Reviews. Like the PC dataset, it collects consumer-written reviews, here covering a wide range of products, each labeled "positive" or "negative".

- SST-2: The Stanford Sentiment Treebank 2 is a collection of movie reviews with binary sentiment labels: 1 for positive and 0 for negative. The reviews span many films, are accompanied by brief descriptions of the movies, and cover topics such as movie quality, acting, special effects, and plot. The dataset is not limited to starkly polarized opinions; it also includes reviews whose sentiment falls between clearly positive and clearly negative.

These diverse datasets serve as the foundation for assessing the effectiveness of different synthetic data generation methods across various scenarios.

Augmentation Techniques Explored

We rigorously tested four state-of-the-art data augmentation techniques:

- AEDA (An Easier Data Augmentation):

  - Methodology: Injects random punctuation marks at random positions within sentences
  - Purpose: Introduces linguistic variability without altering core meaning

- EDA (Easy Data Augmentation):

  - Methodology: Applies four operations at random: synonym replacement, random insertion, random swap, and random deletion
  - Purpose: Adds diversity to the training data while preserving semantic content

- WordNet:

  - Methodology: Leverages a comprehensive linguistic database to replace words with their synonyms
  - Purpose: Enriches vocabulary and introduces semantic variations

- Back Translation:

  - Methodology: Translates sentences into another language (e.g., German or French) and then back into the original language (English)
  - Purpose: Creates new, slightly altered versions of sentences while maintaining overall meaning
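The simplest of these techniques fit in a few lines. The sketch below is an illustration rather than the code used in the study: it implements AEDA-style punctuation insertion and two of EDA's four operations (random swap and random deletion). The punctuation set, the one-third insertion ratio, and the deletion probability are assumptions chosen for the example:

```python
import random
from typing import List

PUNCTUATION = [".", ";", "?", ":", "!", ","]


def aeda(sentence: str, rng: random.Random, ratio: float = 1 / 3) -> str:
    """Insert between 1 and ratio * len(words) punctuation marks at random positions."""
    words = sentence.split()
    n_insert = rng.randint(1, max(1, int(ratio * len(words))))
    for _ in range(n_insert):
        words.insert(rng.randint(0, len(words)), rng.choice(PUNCTUATION))
    return " ".join(words)


def random_swap(sentence: str, rng: random.Random, n_swaps: int = 1) -> str:
    """Swap two randomly chosen word positions, n_swaps times."""
    words = sentence.split()
    if len(words) < 2:
        return sentence
    for _ in range(n_swaps):
        i, j = rng.sample(range(len(words)), 2)
        words[i], words[j] = words[j], words[i]
    return " ".join(words)


def random_deletion(sentence: str, rng: random.Random, p: float = 0.2) -> str:
    """Drop each word with probability p, always keeping at least one word."""
    words = sentence.split()
    if not words:
        return sentence
    kept: List[str] = [w for w in words if rng.random() > p]
    return " ".join(kept) if kept else rng.choice(words)


rng = random.Random(42)  # fixed seed so the demo is repeatable
original = "the battery life of this laptop is excellent"
print(aeda(original, rng))
print(random_swap(original, rng))
print(random_deletion(original, rng))
```

Each function leaves the label untouched, which is the working assumption behind this kind of augmentation: the perturbed sentence is expected to carry the same sentiment as the original.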

Visualization: Dimensionality Reduction with t-SNE

To gain deeper insights into the patterns within our augmented datasets, we employed t-distributed Stochastic Neighbor Embedding (t-SNE). This powerful technique allows us to:

- Visualize high-dimensional data in 2D or 3D space

- Preserve the local structure of the original high-dimensional data

- Identify clusters and relationships that might be hidden in the raw data

Through t-SNE visualization, we were able to observe the impact of different augmentation techniques on the overall data distribution and class separability.

Results and Key Findings

Our rigorous testing yielded several significant insights:

- Performance Metrics: We evaluated the impact of synthetic data on classifier performance using key metrics such as accuracy, precision, recall, and F1 score.

- Technique Effectiveness: Different augmentation techniques showed varying levels of effectiveness across datasets:

  - AEDA excelled in scenarios where minor linguistic variability significantly influenced classifier performance, such as the SST-2 dataset.
  - EDA showed promise for enhancing classification tasks where semantic preservation is crucial, particularly in the PC dataset.
  - WordNet was particularly effective in enriching vocabulary and improving performance on the CR dataset, where diverse expressions of sentiment were prevalent.
  - Back Translation provided the best results in cases where subtle semantic changes could lead to improved generalization, such as in sentiment analysis across all datasets.

- Dataset-Specific Insights: We observed that certain augmentation methods were particularly effective for specific types of datasets, highlighting the importance of tailored approaches.
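For reference, the four metrics named above can be computed directly from the confusion counts of a binary classifier. The sketch below uses made-up labels and predictions, not results from this study:

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Compute accuracy, precision, recall, and F1 for labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Illustrative labels only: 1 = positive review, 0 = negative review.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

Reporting precision and recall alongside accuracy matters most when the classes are imbalanced, because accuracy alone can hide a classifier that mostly predicts the majority class.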

Conclusions and Future Directions

This study demonstrates that data augmentation can lead to substantial improvements in classifier performance when applied judiciously. Key takeaways include:

- The importance of selecting augmentation techniques based on dataset characteristics and classification task requirements

- The potential for combining multiple augmentation methods to achieve optimal results

- The need for continued research into novel data augmentation strategies

Looking ahead, we envision expanding this research to include:

- Exploration of advanced augmentation techniques, such as generative models

- Investigation of domain-specific augmentation strategies

- Development of automated frameworks for selecting optimal augmentation pipelines

By providing these insights, we aim to empower researchers and practitioners in the field of machine learning to enhance the robustness and reliability of their classification models through effective synthetic data generation.